After attending the course "Examinations with Multiple Choice Items" [1], I gained valuable insights into writing effective multiple-choice questions for university teaching. Having just completed another round of exams, I'd now like to share what I've learned.

Pros and Cons of Multiple-Choice Exams

The advantages of multiple-choice questions are well known. Most importantly, they are an efficient form of examination because they are structured and unambiguous:

- Students can demonstrate their knowledge without writing lengthy essays. In terms of ink-to-knowledge ratio, multiple-choice questions are more efficient than open-ended questions.

- For instructors, grading of multiple-choice items can be delegated to teaching assistants (or even automated if the exam is digital).

Beyond time efficiency, I've come to value several other benefits of multiple-choice questions:

- They work in a variety of settings (pen-and-paper exams, live Kahoot quizzes during lectures, or self-paced assessments). This versatility makes it easier to reuse items across different formats.

- Multiple-choice questions and answers can be automatically shuffled, making it harder for students to cheat by copying from each other.
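As a rough illustration of the shuffling mentioned above, here is a minimal Python sketch. The `Item` class and the `shuffle_item` and `make_variant` helpers are hypothetical names made up for this example, not part of any particular exam tool. The sketch assumes each item has exactly one best answer, stored as an index.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    stem: str        # question text
    options: tuple   # answer options, in their original order
    correct: int     # index of the best answer within `options`


def shuffle_item(item, rng):
    """Return a copy of `item` with its options in random order."""
    order = list(range(len(item.options)))
    rng.shuffle(order)
    options = tuple(item.options[i] for i in order)
    # The best answer moves to wherever its old index ended up.
    return Item(item.stem, options, order.index(item.correct))


def make_variant(items, seed):
    """Build one exam variant plus its answer key from a seed."""
    rng = random.Random(seed)
    variant = [shuffle_item(item, rng) for item in items]
    rng.shuffle(variant)  # also shuffle the order of the questions
    key = ["ABCDEFGH"[item.correct] for item in variant]
    return variant, key
```

Because the answer key is derived from the same seeded shuffle as the variant, the solution handed to the graders cannot drift out of sync with the printed exam, and the same seed always reproduces the same variant.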

Finally, I believe that interoperability with AI tools is an important consideration:

- AI tools like ChatGPT or NotebookLM can now effectively generate multiple-choice questions from slides and lecture notes, while I am not so sure about their ability to create smart open-ended questions.

- AI tools are also valuable for quality control: they can verify that questions are clear, that only one answer is correct, and that the solution I give to the teaching assistants matches the correct answer.

In my experience, AI tools have made the creation of multiple-choice exams quite efficient, although I should note that I don't have rigorous evidence that AI tools work better specifically for multiple-choice questions compared to other formats.

I should also note the disadvantages of multiple-choice questions:

- It seems obvious that only some types of learning goals can be assessed with multiple-choice questions (typically, remembering facts and terminology, or estimating quantities).

- Often, for a given course, there is a limited number of questions that can be constructed from the course material. When creating a new exam in the following year, I found it hard to come up with additional multiple-choice questions that are equally relevant.

- If the items are not carefully designed, guessing can be a viable strategy to get a high score. While some teachers try to discourage guessing by penalizing wrong answers, I learned in the course that this is not a good solution, because it might discriminate between students with different risk attitudes (which, as was stressed in the course, are sometimes correlated with gender).

How to Write Good Multiple-Choice Questions

In the course, we submitted some of our own multiple-choice questions, which were then analyzed by the other participants.

One insight I gained through this exercise was that people from another discipline are often best positioned to evaluate multiple-choice item design. If they can solve a question through guessing, it likely contains unintentional clues in either the question or answer options.

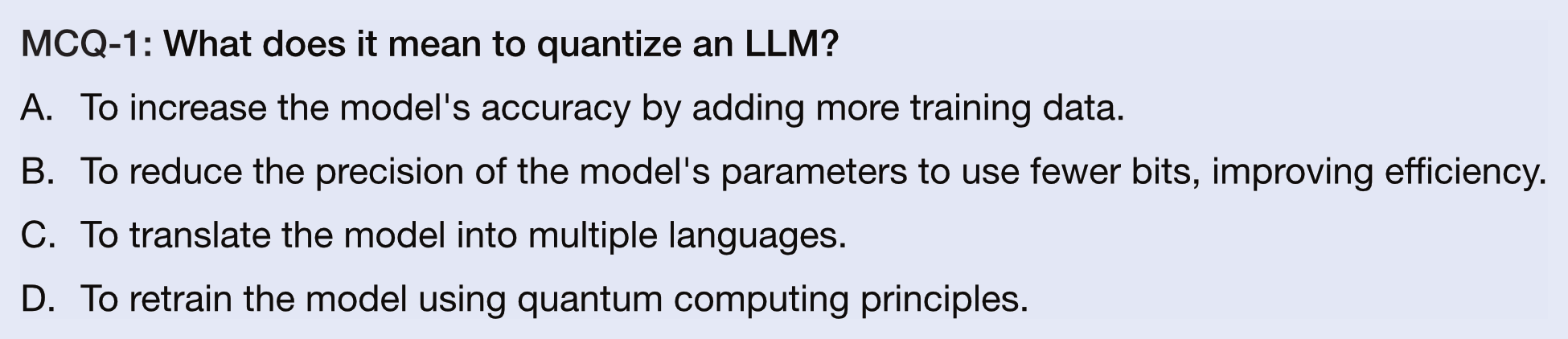

As an example, let's analyze the following item that was generated by ChatGPT (https://chatgpt.com/share/6856a026-2bf8-8012-83de-b6a13daeeebc):

Some cues that give away the correct answer:

- Not all distractors are equally plausible. For example, it's unlikely that the correct answer will have anything to do with quantum computing.

- The correct answer is often the longest and most precise option, which can unintentionally signal to students which answer to choose.

Some other tips and tricks I learned:

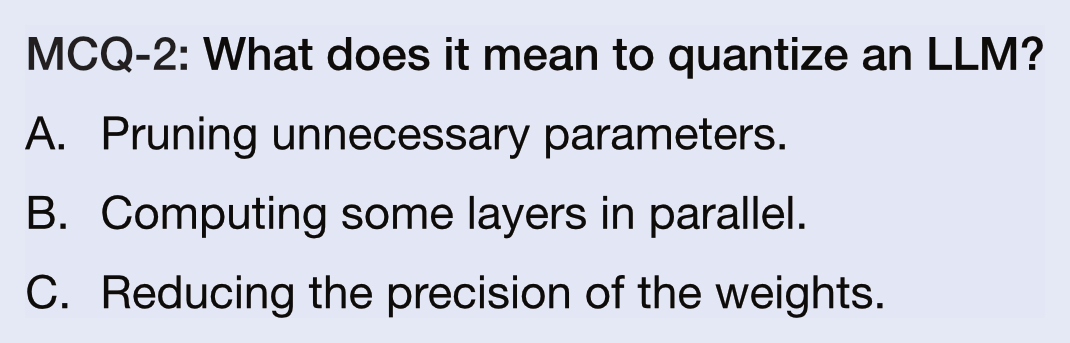

- Research shows that multiple-choice exams have a diminishing return on the number of distractors, or even a negative return. Three options are typically sufficient, and adding a fourth option increases reading time without making the assessment more reliable. Better use that time for additional questions!

- Instruct students to select the "best" answer rather than the "correct" answer. This allows me to keep the correct option concise while making the distractors as plausible as possible. For instance, answer B in the example above isn't technically accurate (quantization can apply to activations, not just parameters), but it's clearly the "best" option among the choices provided.

Based on these insights, here's an improved version of the item:

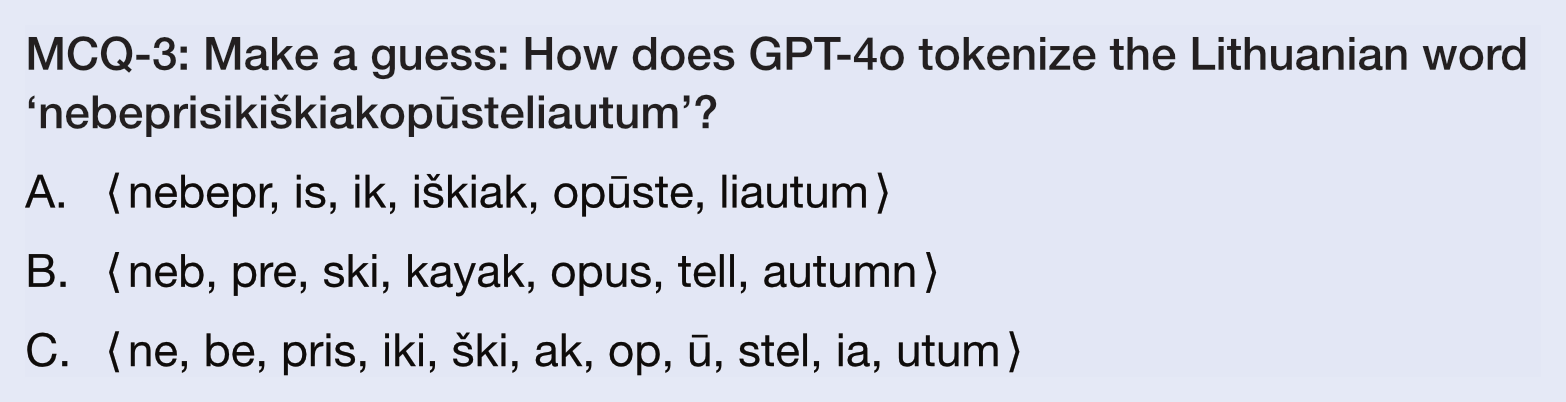

Interestingly, asking students to select the "best" answer also enables more creative items, such as estimation questions or questions that require educated guessing beyond simple recall. Here's an example:

I like this type of question because it requires higher-level thinking beyond memorization. However, a student in a recent exam-prep session told me that they find the question unfair (because, as they said, they do not speak Lithuanian). This reaction likely stems from the fact that students are conditioned to view multiple-choice questions as tests of memorization only. Therefore, it's important to prepare students for the question format in advance.

The K-prime Question Format

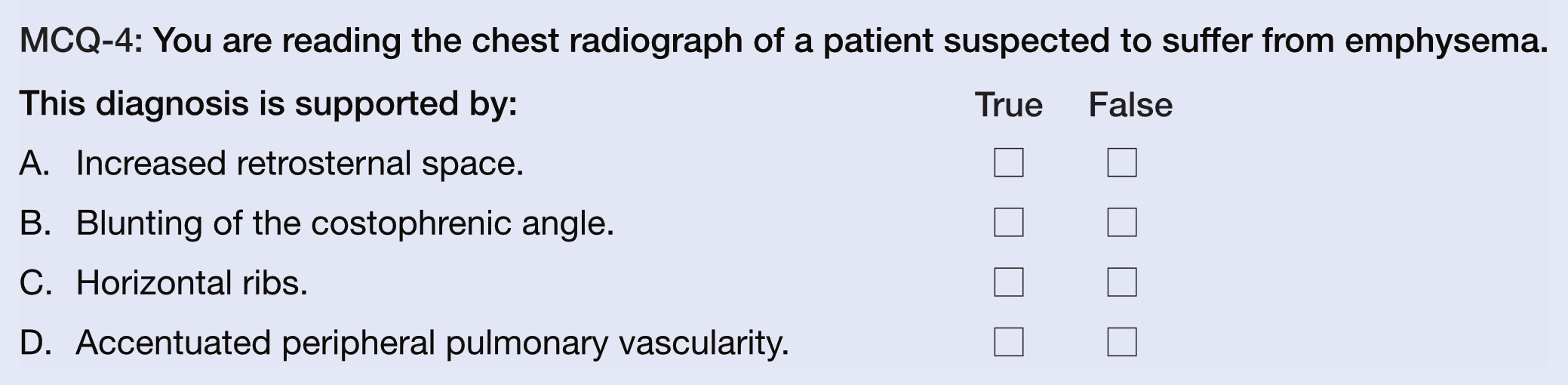

In the course, I learned about an interesting question format called "K-prime".2 K-prime questions might be a Swiss invention, as they were first described in the paper "The Swiss Way to Score Multiple True-False Items" (Krebs, 1997).

In K-prime items, every answer option needs to be separately rated as true or false by the students. Any number of options can be true (including zero or all of them). There are always four options, and a student gets 2 points if they correctly rated all the four options. To reward partial knowledge, the student gets 1 point if three out of four options are correctly rated. However, they get 0 points if they rate two or fewer options correctly, which makes guessing ineffective.

In medical education, K-prime questions are particularly useful because many phenomena have multiple causes or factors, as shown in this example from Krebs (1997):

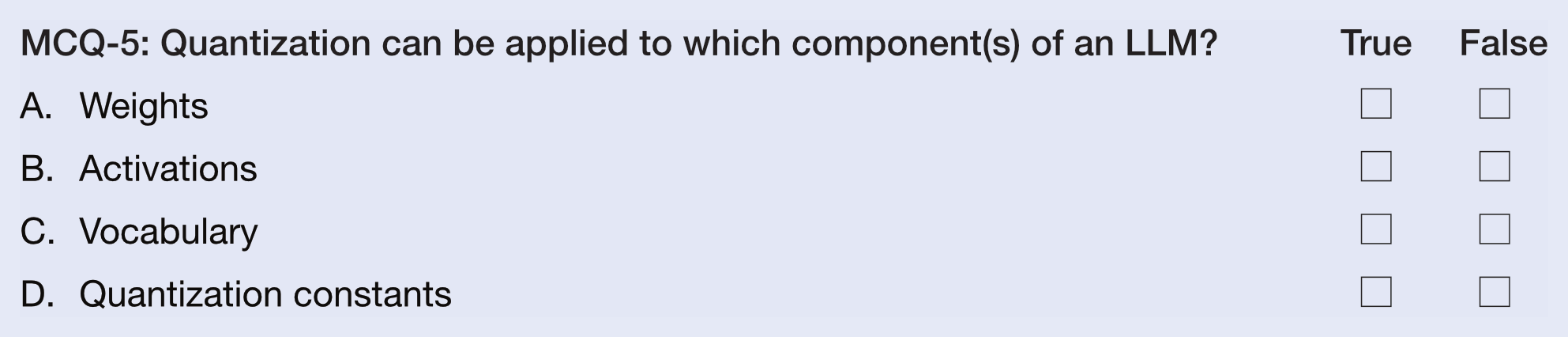

K-prime questions could also work well for computer science topics:

After implementing K-prime questions myself (in a math refresher course), I found that they offer several advantages over traditional multiple-choice questions:

- Question writing becomes easier, because the options are independent of each other. While there may be some interdependence, this interdependence stems from the content itself rather than from the format of the question (e.g., using a process of elimination to exploit the format).

- It will likely become easier to reuse questions in future exams, since answer options can be varied independently from each other. Also, for certain topics, K-prime questions appear to be more straightforward to create than traditional multiple-choice questions, allowing for a larger question pool overall.

- The grading scheme discourages guessing, making sure that students' individual risk attitudes don't affect their grade.
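To make the effect of the grading scheme on guessing concrete, here is a small Python sketch of the K-prime scoring rule (2 points for four correct ratings, 1 point for three, 0 otherwise). The function names `kprime_score` and `expected_guess_score` are my own, and the calculation assumes a student who rates every option true or false with equal probability, which is a simplification.

```python
from fractions import Fraction
from itertools import product


def kprime_score(key, ratings):
    """Score one K-prime item: 2 / 1 / 0 points for 4 / 3 / fewer correct ratings."""
    if len(key) != 4 or len(ratings) != 4:
        raise ValueError("K-prime items have exactly four options")
    n_correct = sum(k == r for k, r in zip(key, ratings))
    if n_correct == 4:
        return 2
    if n_correct == 3:
        return 1
    return 0


def expected_guess_score(key):
    """Average score over all 16 equally likely true/false rating patterns."""
    patterns = list(product([True, False], repeat=4))
    total = sum(kprime_score(key, pattern) for pattern in patterns)
    return Fraction(total, len(patterns))


key = (True, True, True, False)  # MCQ-4 in the answer key: A, B, C are true
print(kprime_score(key, (True, True, True, False)))   # 2
print(kprime_score(key, (True, True, False, False)))  # 1
print(expected_guess_score(key))                      # 3/8
```

Under this assumption, a guessing student earns 3/8 of a point on average, which is 18.75% of the 2 available points. For comparison, blind guessing on a single-best-answer item with three options yields one third of the points.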

Tips and Tricks for Writing Good K-prime Questions

Based on my experience writing K-prime questions and supervising teaching assistants who created their own, here are some key tips for writing effective K-prime questions:

- Avoid negations (or double negations). Since any statement may be true or false, there is no need to use negations to make a statement fit the item format.

- Avoid compositions of several propositions (e.g., "A and B", or "A, because B"). Simply split them into separate statements.

- Make sure to balance true and false options: I noticed that AI tools like ChatGPT default to writing correct options, and incorrect options need to be written manually. One explanation might be that LLMs have seen far fewer K-prime questions than standard multiple-choice questions in their training data. Since K-prime questions lack the formal constraint that a single option must be correct, LLMs are more influenced by their "truth bias" than anything else. Interestingly, a study has found that human exam creators have a similar bias, choosing to make 60.5% of the options true on average (Krebs, 2019).
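One simple way to keep an eye on this balance is to compute the share of true options across an item pool. The sketch below is only an illustration: `share_of_true_options` is a hypothetical helper, and the pool consists of the two K-prime keys from the answer key at the end of this post.

```python
def share_of_true_options(answer_keys):
    """Fraction of all options in the pool that are keyed as true."""
    flags = [flag for key in answer_keys for flag in key]
    if not flags:
        raise ValueError("the item pool is empty")
    return sum(flags) / len(flags)


pool = [
    (True, True, True, False),  # MCQ-4: A, B, C are true
    (True, True, False, True),  # MCQ-5: A, B, D are true
]
print(share_of_true_options(pool))  # 0.75
```

With only two items the number says little, but run over a full exam it gives a quick signal of whether more false options need to be written.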

Conclusion

I have become a believer in multiple-choice questions, but it's clear that they need to be used in combination with other question types. Due to their efficiency and ease of grading, I am planning to keep them in the mix, so that I can free up resources for more creative and open-ended questions in the rest of the exam.

Answer key for the examples:

MCQ-1: B, MCQ-2: C, MCQ-3: C, MCQ-4: A, B, C, MCQ-5: A, B, D

This post was written for the course "Teaching Skills – Systematic Development of Teaching Competence" at University of Zurich.

References

René Krebs. The Swiss Way to Score Multiple True-False Items: Theoretical and Empirical Evidence, pages 158–161. Springer Netherlands, Dordrecht, 1997. URL: https://doi.org/10.1007/978-94-011-4886-3_46, doi:10.1007/978-94-011-4886-3_46.

René Krebs. Prüfen mit Multiple Choice: Kompetent planen, entwickeln, durchführen und auswerten. Hogrefe Verlag GmbH & Co. KG, 2019. ISBN 9783456759029. URL: https://elibrary.hogrefe.com/book/10.1024/85902-000, doi:10.1024/85902-000.

[1] Organized by Antonia Bonaccorso and Tobias Halbherr of the ETH/UZH Didactica Program.

[2] The name "K-prime" is derived from "K-type questions", which are common in medical education. K-type (as opposed to A-type) questions allow for multiple correct answers. The K-prime (or K') format improves over simple K-type questions by asking about the truth of every option individually.